Causal Connection: Filling in Lifescience AI Whitespace

Introduction

Machine learning models, particularly large language models (LLMs) such as PaLM 2, GPT-4, and BERT, have changed numerous sectors, including the life sciences, by offering new ways to understand, generate, and interpret human language. However, although they excel at pattern recognition and text generation, LLMs lack one critical capability: causal reasoning.

Causality in LLMs

Causality, the relationship between cause and effect, is a fundamental concept that enables understanding and prediction in many contexts. Today's LLMs rely on statistical patterns in their training data rather than on a model of true causation. They can generate text that appears meaningful, but they do not comprehend the causal relationships embedded within that text.

Importance of Causality

In healthcare, understanding causality is crucial. It underlies the entire scientific process of discovering and validating medical interventions. For instance, clinicians and researchers need to understand whether a drug (cause) will effectively treat a disease (effect). Similarly, it's important to identify if a particular lifestyle habit (cause) increases the risk of a health condition (effect). Without a clear understanding of these causal relationships, it is challenging to make accurate predictions or reliable decisions.

Moreover, causality plays a key role in inference and decision-making, crucial elements of life sciences. An LLM could theoretically infer a patient's diagnosis based on their symptoms or recommend a potential treatment. However, without understanding causality, these inferences could be misleading or incorrect. Thus, incorporating a causal understanding into LLMs would significantly increase their potential utility in the Healthcare & Lifescience sectors.

Blueprint

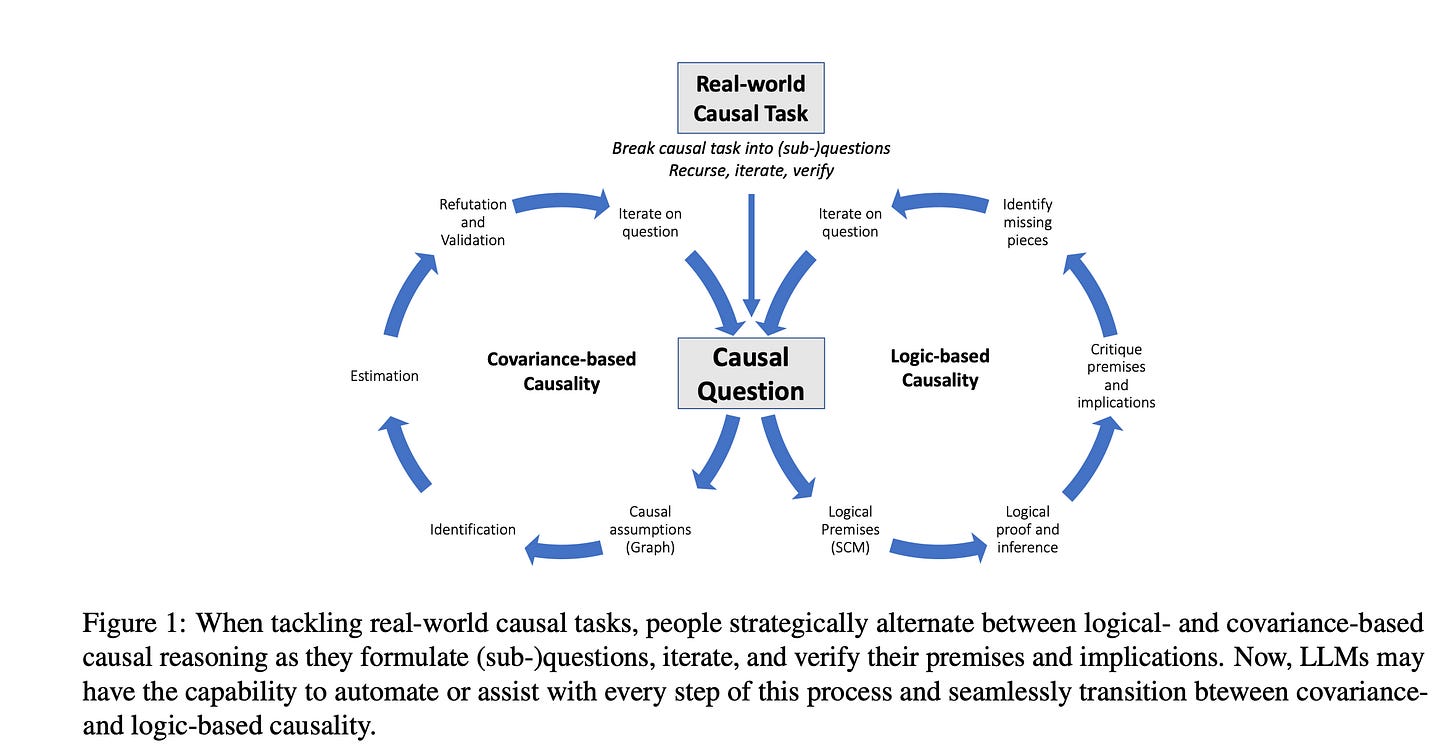

Amidst the hype surrounding AI's disruptive potential in life sciences, the practical implementation of AI solutions remains vague. I envision an 'AI Agent' solution built on top of LLMs as a promising approach. Recent literature from researchers at Microsoft supports this idea, suggesting that LLMs could be used alongside existing causal methods to serve as a proxy for human domain knowledge.

“We envision LLMs to be used alongside existing causal methods, as a proxy for human domain knowledge and to reduce human effort in setting up a causal analysis, one of the biggest impediments to the widespread adoption of causal methods.” [1]


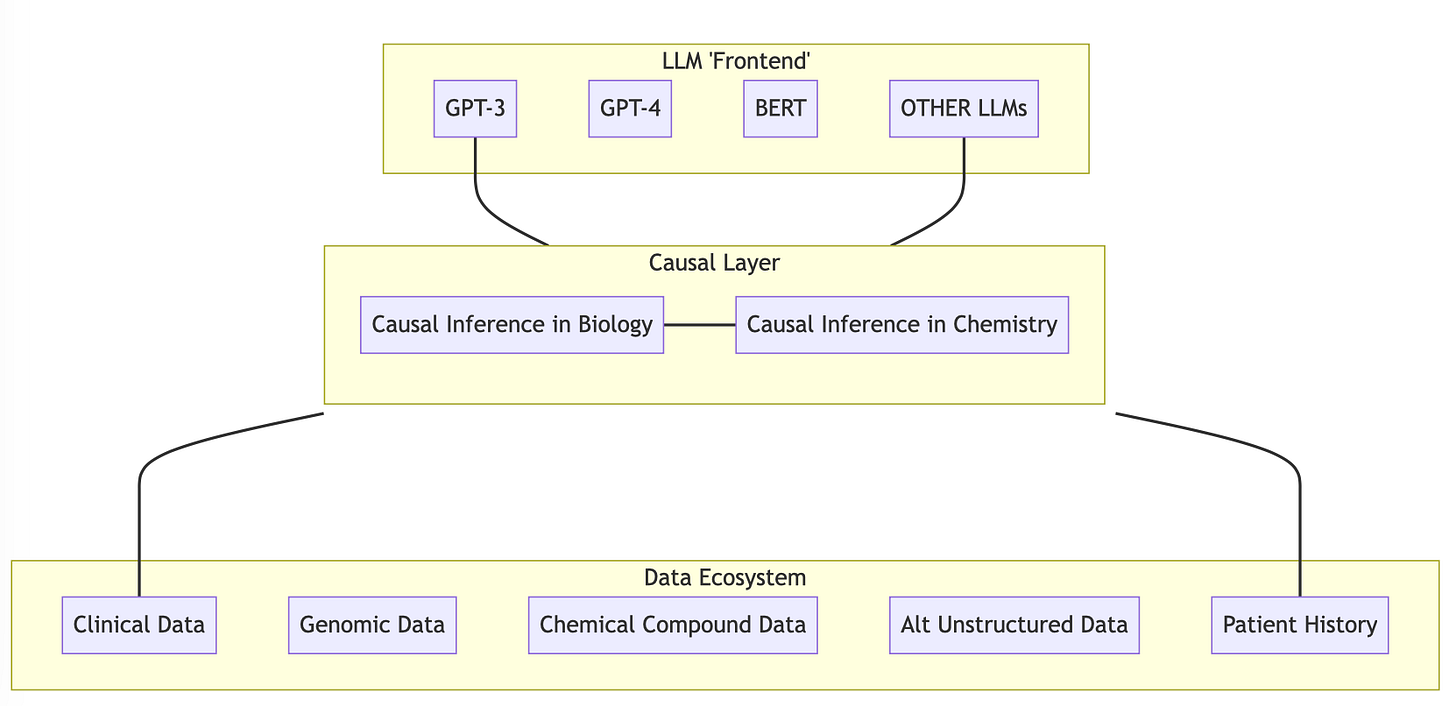

A multi-layered architecture, depicted in the diagram, presents a solution for making complex causal decisions using LLMs, an approach termed “knowledge-based causal discovery”. Prior to ‘knowledge-based causal discovery’, the best known accuracy for causal methods on the benchmark Tübingen dataset was 83%. Using GPT-4 with prompt engineering, researchers were able to boost accuracy to 96.2%. However, challenges such as the 'hallucination effect', where the LLM provides an incoherent argument and an incorrect answer, may call for a hybrid approach that combines traditional causal methods with LLMs in the form of ensemble models.
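To make the pairwise task concrete, here is a minimal sketch of how a question about causal direction might be posed to an LLM and how its reply might be read back. The prompt wording, the function names, and the example variable pair are assumptions made for illustration; this is not the prompt used in the cited study, and no model API is called.

```python
import re

# Hypothetical prompt wording, for illustration only.
PROMPT_TEMPLATE = (
    "Which cause-and-effect relationship is more likely?\n"
    "A. Changing {x} causes a change in {y}.\n"
    "B. Changing {y} causes a change in {x}.\n"
    "Answer with a single letter, A or B."
)


def build_pairwise_prompt(x, y):
    """Return the text to send to an LLM for one variable pair."""
    return PROMPT_TEMPLATE.format(x=x, y=y)


def parse_direction(reply, x, y):
    """Map a model reply to a (cause, effect) tuple, or None if unclear.

    Only a standalone capital 'A' or 'B' counts as an answer, so a reply
    that starts with the article "A" would be misread. A production
    parser would need a stricter answer format.
    """
    match = re.search(r"\b([AB])\b", reply.strip())
    if match is None:
        return None
    return (x, y) if match.group(1) == "A" else (y, x)


prompt = build_pairwise_prompt("altitude", "temperature")
print(prompt)

# Simulated replies; a real system would get these from the model.
print(parse_direction("A", "altitude", "temperature"))
print(parse_direction("I am unsure", "altitude", "temperature"))
```

Returning `None` for an unclear reply gives the downstream layers a way to notice when the LLM did not commit to an answer, rather than silently accepting a guess.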

Ensemble models combine the predictions of multiple models to produce a final prediction that often outperforms any single model. In the context of healthcare, where decisions are influenced by multiple factors and can have significant impacts on patient outcomes, ensemble models offer several advantages.

An ensemble model aggregates the outputs of different models, such as LLMs and causal inference models, to leverage their diverse perspectives and strengths. By considering multiple viewpoints, an ensemble model can reduce the risk of biased or inaccurate decisions caused by individual models. Each model within the ensemble may capture unique patterns and insights, and the combination of their outputs can yield a more comprehensive understanding of the complex relationships in healthcare data.

In this multi-layered architecture, ensemble models employ weighting mechanisms to combine predictions and determine consensus. Each model contributes its prediction, and specific weighting schemes incorporate the individual influences of the models. Consensus is determined through aggregation techniques like voting, averaging, or weighted averaging, ensuring a balanced and reliable final decision.
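As a rough illustration of weighted voting, the sketch below combines categorical answers from several models into one consensus. The model names, weights, and labels are hypothetical placeholders; in practice the weights would have to come from validation performance or expert judgment.

```python
from collections import defaultdict


def weighted_vote(predictions, weights):
    """Combine categorical predictions by weighted voting.

    predictions: dict mapping model name -> predicted label
    weights:     dict mapping model name -> non-negative weight
    Returns (winning_label, scores), where scores are normalized to sum to 1.
    """
    totals = defaultdict(float)
    for model, label in predictions.items():
        totals[label] += weights.get(model, 0.0)

    total_weight = sum(totals.values())
    if total_weight == 0:
        raise ValueError("at least one model needs a positive weight")

    scores = {label: w / total_weight for label, w in totals.items()}
    winner = max(scores, key=scores.get)
    return winner, scores


# Hypothetical models and weights, for illustration only.
predictions = {
    "llm": "A causes B",
    "causal_bayes_net": "A causes B",
    "structural_equation_model": "B causes A",
}
weights = {
    "llm": 0.5,
    "causal_bayes_net": 0.3,
    "structural_equation_model": 0.2,
}

winner, scores = weighted_vote(predictions, weights)
print(winner, scores)
```

The normalized score of the winning label doubles as a crude agreement measure: a low value signals that the models disagree and the case may deserve human review.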

Building the AI Agent

OK, so we have a design. Now, how can we build our causality-infused AI Agent?

Understanding Causality: The first step is to understand the concept of causality and how it differs from correlation. Much of current machine learning, including LLMs, is focused on pattern recognition and correlation. In healthcare, causality is critical. For example, knowing that a disease is correlated with certain symptoms is less helpful than knowing that a particular genetic mutation causes the disease.
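A small simulation can make the difference tangible. In this toy sketch (the variables and the data-generating process are invented for illustration), a hidden factor Z drives both X and Y, while X has no effect on Y at all. Observed X and Y are still clearly correlated, and the correlation vanishes once X is set independently of Z, which is what an intervention such as a randomized trial does.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    vx = sum((a - mx) ** 2 for a in xs)
    vy = sum((b - my) ** 2 for b in ys)
    return cov / (vx * vy) ** 0.5


def simulate(n, intervene, rng):
    """Draw n samples of (X, Y) from a toy model with hidden confounder Z."""
    xs, ys = [], []
    for _ in range(n):
        z = rng.gauss(0, 1)              # hidden common cause
        if intervene:
            x = rng.gauss(0, 1)          # X assigned independently of Z
        else:
            x = z + rng.gauss(0, 1)      # X is driven by Z
        y = z + rng.gauss(0, 1)          # Y depends on Z only, never on X
        xs.append(x)
        ys.append(y)
    return xs, ys


rng = random.Random(0)
obs_corr = pearson(*simulate(5000, False, rng))
int_corr = pearson(*simulate(5000, True, rng))

print(f"observational correlation:  {obs_corr:.2f}")
print(f"interventional correlation: {int_corr:.2f}")
```

Under this model the observational correlation should land near 0.5 and the interventional one near zero. A system that only learns from the observational data has no way to tell that changing X would leave Y untouched.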

Augment LLMs with Causal Inference: Once a solid understanding of causality is in place, the next step is to augment LLMs with causal inference. One way to do this is to layer causal models on top of LLMs, represented in the diagram by the "Causal Layer". This layer reads from the Data Ecosystem and works with the LLMs to apply causal inference techniques. The Causal Layer can then validate the causal relationships proposed by the LLM, which helps minimize the ‘hallucination effect’ and reach a consensus. These causal models can be built with causal inference methods such as Causal Bayesian Networks, Structural Equation Modeling, and do-calculus.
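One concrete calculation a Causal Layer might run is the backdoor adjustment, P(y | do(x)) = Σ_z P(y | x, z) P(z), which follows from do-calculus when the adjustment variable Z blocks every backdoor path between X and Y. The sketch below applies it to made-up patient counts; the numbers, the variable meanings, and the assumption that disease severity is the only confounder are all illustrative.

```python
# counts[(z, x)] = (number of patients, number recovered)
# z: 0 = mild, 1 = severe;  x: 0 = untreated, 1 = treated
# Made-up numbers in which severe cases are treated more often.
counts = {
    (0, 1): (20, 18),
    (0, 0): (80, 64),
    (1, 1): (80, 40),
    (1, 0): (20, 8),
}


def naive_rate(counts, x):
    """P(recovered | X = x), ignoring severity."""
    n = sum(c[0] for (_, xx), c in counts.items() if xx == x)
    r = sum(c[1] for (_, xx), c in counts.items() if xx == x)
    return r / n


def backdoor_rate(counts, x):
    """P(recovered | do(X = x)) via sum over z of P(y | x, z) * P(z).

    Valid only under the assumption that z satisfies the backdoor
    criterion, i.e. it is the only confounder of x and recovery.
    """
    total = sum(n for n, _ in counts.values())
    strata = {z for z, _ in counts}
    rate = 0.0
    for z in strata:
        n_z = sum(n for (zz, _), (n, _r) in counts.items() if zz == z)
        n_zx, r_zx = counts[(z, x)]
        rate += (r_zx / n_zx) * (n_z / total)
    return rate


naive_effect = naive_rate(counts, 1) - naive_rate(counts, 0)
adjusted_effect = backdoor_rate(counts, 1) - backdoor_rate(counts, 0)

print(f"naive difference:    {naive_effect:+.2f}")
print(f"adjusted difference: {adjusted_effect:+.2f}")
```

With these counts the naive comparison makes the treatment look harmful (0.58 versus 0.72 recovery), while the adjusted estimate shows a benefit (0.70 versus 0.60), because treated patients were more often severe cases. A check like this is one way a Causal Layer could flag an LLM's claim that rests on a confounded association.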

Data Integration: To utilize the power of causality, it is necessary to have a diverse, rich, and longitudinal data ecosystem. This includes structured data like clinical data, genomic data, and chemical compound data, and unstructured data like patient history and waveform data.

Iterative Improvement and Validation: Applying causal insights obtained from causality-infused LLMs to the drug discovery and treatment plan processes can create a flywheel. This is an iterative process, where the insights derived from the models should be tested in clinical settings, and the feedback from these tests can be used to improve the models.

Lifescience Cases

The applications of Causality-infused LLMs in drug discovery are vast. Some specialized use cases include:

Precision Medicine for Cancer Treatment: Causality-infused LLMs analyze genomic data, patient characteristics, and treatment outcomes to uncover causal relationships between genetic mutations and cancer subtypes. This knowledge enables personalized therapies and reduces unnecessary treatment regimens.

Uncovering Polypharmacology: Causality-infused LLMs analyze chemical compound data and clinical outcomes to identify causal relationships between drug compounds and multiple target interactions. This exploration opens up possibilities for developing multi-target therapies with enhanced efficacy and reduced side effects.

Decoding Drug-Drug Interactions: Causality-infused LLMs explore the causal relationships between drug compounds, patient characteristics, and molecular pathways to predict potential drug-drug interactions. This insight aids in the selection and dosing of multiple drugs for optimal therapeutic outcomes.

Understanding Drug Resistance Mechanisms: Causality-infused LLMs analyze genetic and clinical data to uncover causal relationships between genetic mutations, drug targets, and treatment resistance. These models inform strategies to overcome drug resistance, leading to more effective treatment plans.

Accelerating Drug Safety Assessment: Causality-infused LLMs analyze adverse event data, patient demographics, and treatment variables to identify causal factors contributing to drug-related adverse events. This knowledge enables proactive safety measures during drug development and post-marketing surveillance.

These specific examples highlight the potential of causality-driven LLMs to advance the understanding of complex biological processes and drive more effective and personalized drug discovery approaches.

Conclusion

While LLMs have already shown promise in healthcare, their lack of understanding of causal relationships remains a significant limitation. Incorporating causality into LLMs is crucial for making AI applications in healthcare more effective and reliable, and it will likely be a critical step in realizing the full potential of AI in the field.

Thanks for Reading.

Email: kunpat15@gmail.com

Citations:

[1] Kıcıman, Emre, et al. "Causal reasoning and large language models: Opening a new frontier for causality." arXiv preprint arXiv:2305.00050 (2023).

[2] Zhang, Cheng, et al. "Understanding Causality with Large Language Models: Feasibility and Opportunities." arXiv preprint arXiv:2304.05524 (2023).